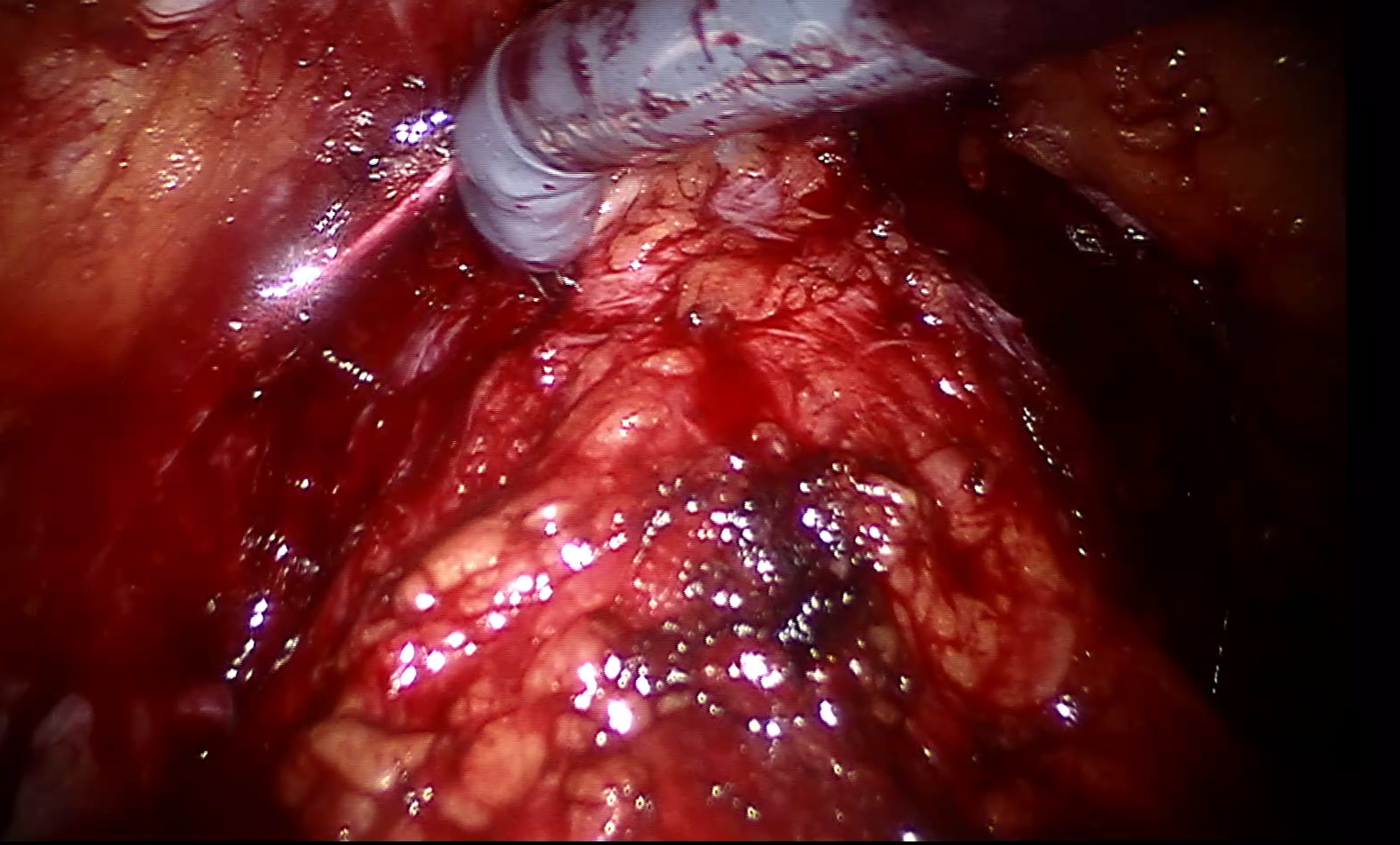

Motivation: This project aims to make augmented reality (AR) feasible for robot-assisted radical prostatectomy (RARP). The primary goal of RARP is to remove the cancerous prostate; a secondary goal is to preserve the important anatomical structures attached to it, which matters for the patient's post-surgical quality of life. The prostate is surrounded by the neurovascular bundle (NVB), responsible for sexual function, and the urethral sphincter, responsible for continence. Removing the prostate while preserving these structures is not trivial: as the image shows, the boundary between cancerous regions and these structures cannot be seen with the naked eye in the endoscope image. This can lead to positive surgical margins, which indicate incomplete removal of the cancer.

Solution: In my PhD, I built an AR system for RARP that overlays a patient's MRI (showing the cancer and anatomical structures) on the endoscope camera image. The demo can be seen in the video below, which shows the endoscope view of the da Vinci surgical system looking at a prostate phantom (blue), with and without the AR overlay. I estimated the calibrations (hand-eye and camera) needed to correctly overlay the MRI information on the camera image, and built and evaluated various AR visualization strategies.
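To illustrate why both calibrations matter for the overlay, here is a minimal sketch of how a point from the MRI could be mapped to a pixel in the endoscope image. It is not the system's actual implementation. It assumes an ideal pinhole camera without lens distortion, and every name in it (`t_cam_ee` for the hand-eye transform, `t_ee_base` for the robot kinematics, `t_base_mri` for the MRI-to-robot registration, and the intrinsics `fx, fy, cx, cy`) is hypothetical.

```python
# Sketch only: chain of rigid transforms followed by a pinhole projection.
# All transform names and numeric values are illustrative assumptions.

def matmul4(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(t, p):
    """Apply a 4x4 homogeneous transform to a 3D point (x, y, z)."""
    h = (p[0], p[1], p[2], 1.0)
    return tuple(sum(t[i][k] * h[k] for k in range(4)) for i in range(3))

def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point; None if behind the camera."""
    x, y, z = p_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

def translation(tx, ty, tz):
    """Pure-translation homogeneous transform, used here for the toy example."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def mri_point_to_pixel(p_mri, t_cam_ee, t_ee_base, t_base_mri, intrinsics):
    """Map an MRI-frame point to pixel coordinates.

    t_cam_ee   : hand-eye calibration (end-effector frame -> camera frame)
    t_ee_base  : robot kinematics (robot base frame -> end-effector frame)
    t_base_mri : registration (MRI frame -> robot base frame)
    intrinsics : (fx, fy, cx, cy) from camera calibration
    """
    t_cam_mri = matmul4(matmul4(t_cam_ee, t_ee_base), t_base_mri)
    return project(transform_point(t_cam_mri, p_mri), *intrinsics)

identity = translation(0, 0, 0)
pixel = mri_point_to_pixel(
    (10.0, -5.0, 0.0),          # point in the MRI frame (mm)
    identity,                   # toy hand-eye transform
    identity,                   # toy kinematics
    translation(0, 0, 100),     # MRI origin 100 mm in front of the camera
    (800.0, 800.0, 320.0, 240.0),
)
print(pixel)
```

An error in any transform in the chain shifts the projected pixel, which is why the overlay accuracy depends on the hand-eye and camera calibrations together.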

We have ethics approval for the clinical study, and many of our techniques have been translated to and used in real surgeries at VGH. Our current team consists of Prof. Tim Salcudean, who leads the project; Dr. Peter Black, a urologist at VGH; Dr. Silvia Chang, a radiologist; two graduate students (including myself); and many undergraduate interns who join the team for short terms. Prof. Nassir Navab, an expert in medical AR, and Prof. Sidney Fels, an expert in perception and human-computer interaction (HCI), provide supervision on designing user-friendly AR visualizations for surgery.

References

Kalia, M., Avinash, A., Navab, N., & Salcudean, S. E. (2021). Preclinical Evaluation of a Marker-less, Real-time, Augmented Reality Guidance System for Robot Assisted Radical Prostatectomy. International Journal of Computer Assisted Radiology and Surgery. (In Press)

Samei, G., Tsang, K., Kesch, C., Lobo, J., Hor, S., Mohareri, O., ... & Salcudean, S. (2020). A partial augmented reality system with live ultrasound and registered preoperative MRI for guiding robot-assisted radical prostatectomy. Medical Image Analysis, 60, 101588.